Long-term digital archiving is actually much more involved than it appears. On one hand, you might think you can simply make a copy of something and store it indefinitely. On the other, it would be naïve to consider this a secure long-term storage method.

Numerous natural factors, including magnetism, gravity, and environmental conditions (temperature, moisture, dust, etc.), can degrade our data. For digital data, it seems that the technology itself and magnetism pose greater threats than climate-related factors, especially for spinning media.

In the late ’90s, I thought CD-Rs would be a reliable backup mechanism. I made numerous copies, only to discover in recent years that many no longer work, despite being stored in a moisture-free environment, inside sleeves and boxes. In some cases, parts of the discs adhered to the sleeves upon removal, or the coating was visibly deteriorating.

Is magnetic storage “safer” than other digital storage for archiving?

I have hard drives from the ’90s that still function perfectly, without any SMART errors, after tens of thousands of hours of use. Even drives from less reputable brands (like Maxtor) still operate well in some cases.

Are spinning hard drives suitable for long-term archiving? Probably not. Spinning disks can seize after sitting unused for extended periods, although it’s unclear how long this takes and under what conditions. Nevertheless, I’ve had success with very old HDDs (around 100MB, circa the early ’90s) purchased on eBay, with most functioning properly. I was even able to recover data from drives that had supposedly been “wiped.”

The internet offers much speculation about long-term data storage, but no definitive answers. Some suggest using Verbatim archival discs, which cost $5-10 each and offer only 100GB of storage. These discs are slow to write (1-2x speed for 100GB) and purportedly last for hundreds of years, though nobody alive today will be around to confirm it. (I hope 1x isn’t 150KB/sec like it was for CD-Rs.)
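For a rough sense of what 1-2x means at 100GB, here is a back-of-the-envelope sketch. It assumes these are Blu-ray family (BDXL) discs, where 1x is 4.5 MB/s, and uses the 150KB/sec CD figure purely for comparison. Both rates and the function name `write_minutes` are assumptions for illustration, and a real burn adds verification and other overhead.

```bash
#!/usr/bin/env bash
# Rough write-time estimate for a 100GB disc at a given sustained rate.
# Assumptions: decimal units (1 GB = 10^9 bytes) and a constant write speed.

DISC_BYTES=$(( 100 * 1000 * 1000 * 1000 ))

# Usage: write_minutes <bytes-per-second>
# Prints the whole number of minutes needed to write DISC_BYTES.
write_minutes() {
    local rate=$1
    echo $(( DISC_BYTES / rate / 60 ))
}

write_minutes 4500000   # assumed Blu-ray 1x (4.5 MB/s)
write_minutes 9000000   # assumed Blu-ray 2x
write_minutes 150000    # CD 1x (150KB/sec), for comparison only
```

Under those assumptions that works out to roughly six hours at 1x and three hours at 2x, versus more than a week at CD speed.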

People also worry about bit rot on SSDs and other flash storage if the device isn’t powered up every few years. I have an SSD from 2015 that wasn’t powered until recently, and the data was intact, though I hadn’t verified every piece of data back in 2015.
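One way to make “the data was intact” checkable next time is to record checksums when the drive goes on the shelf and compare them when it comes back out. This is a minimal sketch, assuming GNU coreutils and findutils (`sha256sum`, `realpath`, `sort -z`, `xargs -0 -r`). The function names `make_manifest` and `verify_manifest` are hypothetical, not part of any tool.

```bash
#!/usr/bin/env bash
# Record a SHA-256 checksum for every file under a directory.
# Usage: make_manifest <dir> <manifest-file>
# Keep the manifest file outside <dir> so it does not hash itself.
make_manifest() {
    local dir=$1 manifest=$2
    # Paths are stored relative to <dir>, so the manifest still works
    # after the drive is remounted somewhere else.
    ( cd "$dir" && find . -type f -print0 | sort -z | xargs -0 -r sha256sum ) > "$manifest"
}

# Re-hash the files and compare them against the stored manifest.
# Usage: verify_manifest <dir> <manifest-file>
# Returns non-zero if any listed file is missing or has changed.
verify_manifest() {
    local dir=$1 manifest
    manifest=$(realpath "$2")
    # --quiet prints only the files that fail, not an OK line per file.
    ( cd "$dir" && sha256sum --check --quiet "$manifest" )
}
```

Run `make_manifest` before the drive is put away and `verify_manifest` years later. A silent run with exit status 0 means every listed file still hashes the same. It only detects damage; it cannot repair anything.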

A BIG aside…(insert wavy blurry fade to another thought transition) Couldn’t sleep…

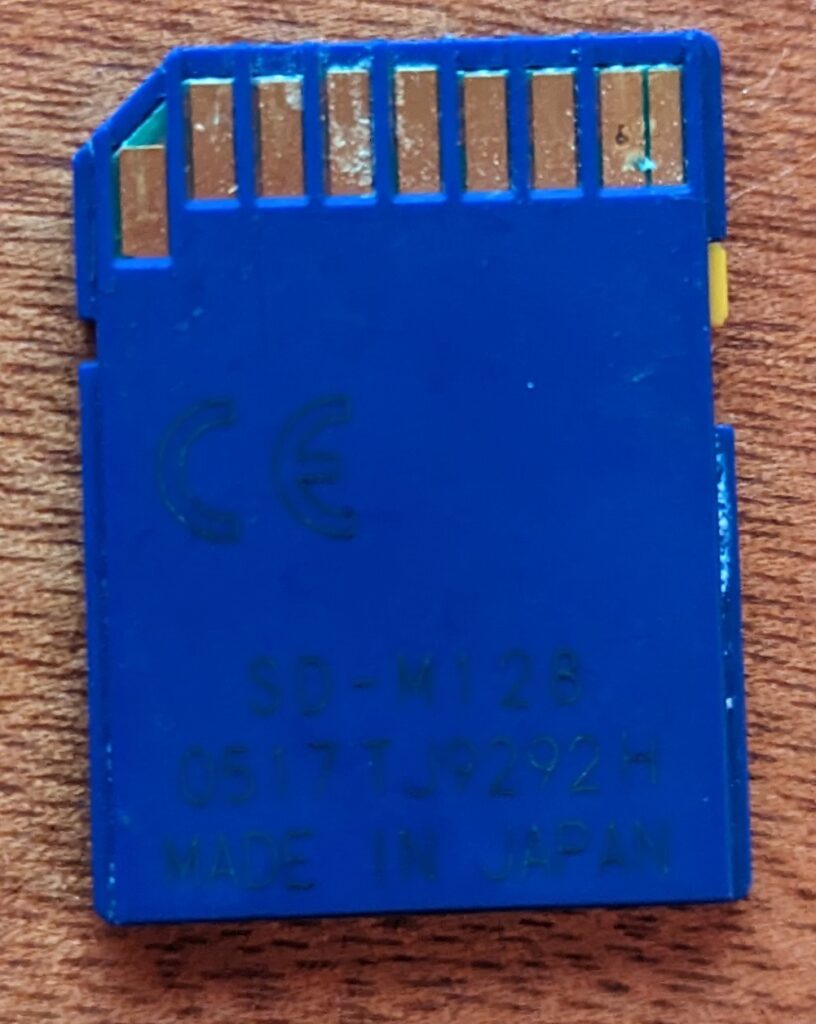

The Story of the loneliest SD card in Florida…

After writing the part above about old media longevity, I went through my “small parts” box, remembering that a friend had mailed me an SD card he found on the street. He said:

“I found them in Florida in the dresser on the side of the road waiting for the trash man to come get it I opened up the drawers and there was a bunch of stuff in there I took that was one of the things”

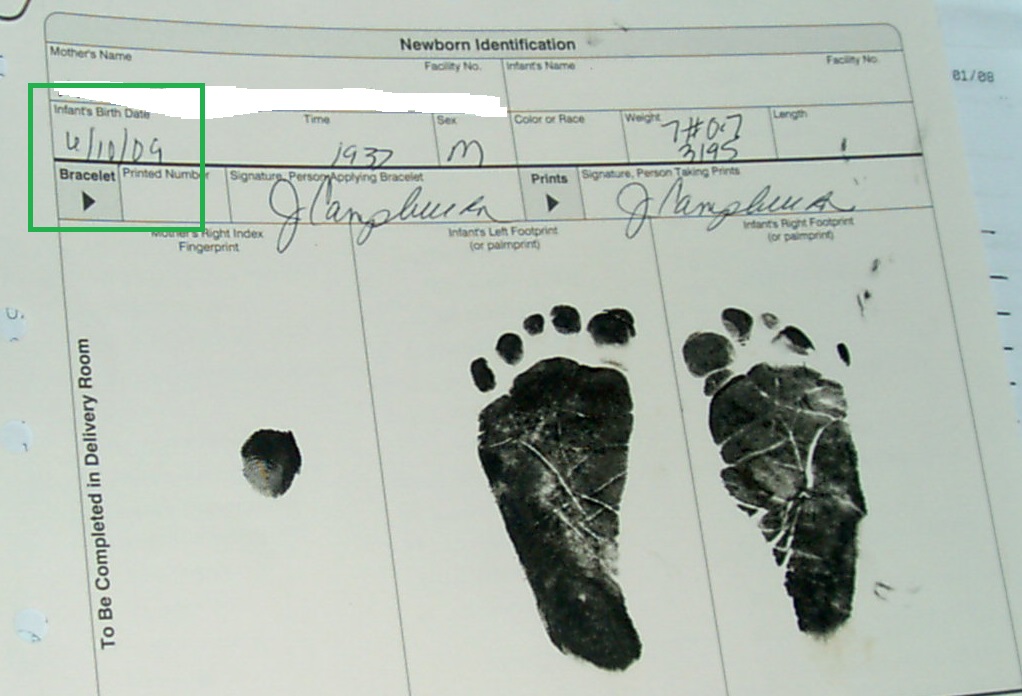

I thought I had tested it and found it dead, but forgetting that, I put it into an SD reader again and voilà, a bunch of very-much-flash-on-close-up-pictures-of-a-baby-coming-out-of-his-mom appeared. No, that’s not a link.

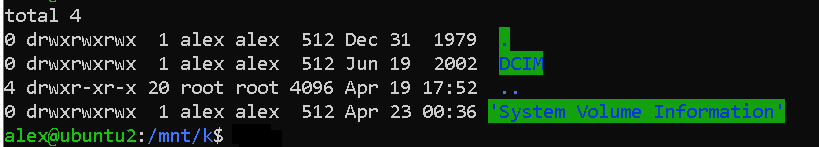

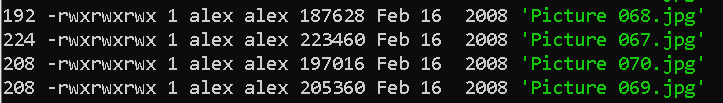

Hundreds of pictures... let’s dig in with ExifTool:

Data looks good on this one. Let’s try all of them:

alex@ubuntu2:/mnt/k/DCIM$ for file in *.jpg; do exiftool -warning "$file" | grep -q "Warning" && echo "Corrupt image detected: $file"; done

alex@ubuntu2:/mnt/k/DCIM$

Good to go. (And yes, I use a very lightweight LLM to crank out bash scripts for me all day.)

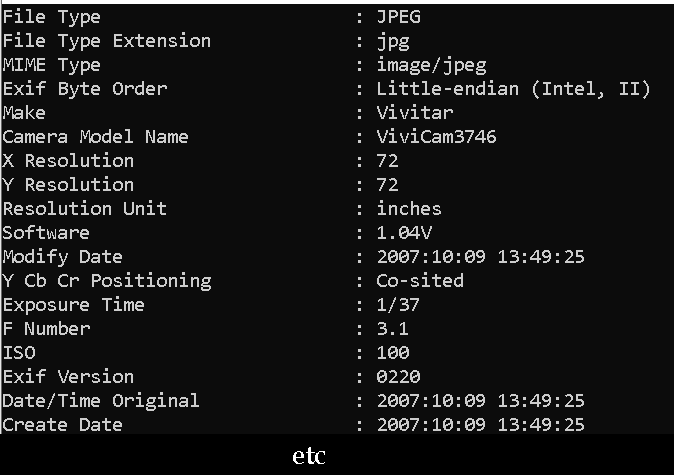

Metadata looks good, and looking up some figures, I see that Exif version 2.20 came out in 2002 (2.21 came out in 2003). In fact, Exif has had a really slow release schedule: about 12 releases since 1995. TL;DR: this camera was a piece of shit in 2002 and well worn by 2007, when the picture may have been taken (few people set the clocks on these cameras, I’m sure). Still, it’s pretty convincing that this is a 15+ year old SD card that was not only well worn and found outside on the street in Florida, but also seems to be in perfect digital shape.

Anyway, the sensor was only generating a sub-1MP image, with a very, very odd shutter speed on this particular one. The operator could have cranked it all the way up to 3MP if they were daring. Guess not.

Megapixels : 0.786

Shutter Speed : 1/37

Oh look, here’s a folder with giant 3MP images... they WERE daring! Let’s see if we can figure out the actual time frame from the pictures…

Oh wait... never mind.

And the story checks out... and the camera date/time seems to be set right!

Bit rot be damned! Maybe the oppressive Florida humidity keeps bits together?!

(Creature from the Black Lagoon pinball reference)

Distributing Risk…

To distribute risk, I considered making multiple copies on different media types (a spinning disk, a PC SSD, and perhaps a rugged flash drive), though I decided against using CD-Rs. That’s annoying, though: copying all of your important data onto yet another medium? It may work if all you store is Word docs, but not for large media files.

Could tape be a safer archival medium? Transferring data from SSDs and HDDs to LTO tapes, which hold up to 6TB uncompressed (as of LTO-7; newer generations hold more), might be prudent considering the incredible sizes of HDDs now (Seagate announced a 30TB spinning drive in January). OTOH, compression might reduce the likelihood of future data recovery.

Distributing archive data to multiple cold storage providers is not a bad idea, if you are willing to spend a month uploading it and then pay-to-play to get it back (at least today). Checking Deep Glacier (or is it just Glacier now?), AWS will hold your data hostage, I mean, archive your data indefinitely for $3.60 a month per TB. If you want it back, you are going to pay: data OUT is $9.00 for one TB. Azure cold storage is a similar story. So although your data is all but guaranteed to last as long as Amazon and Microsoft are around, it won’t be cheap.
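To see how those two prices add up over the life of an archive, here is a small sketch using only the figures quoted above ($3.60 per TB per month to store, $9.00 per TB to get it out). The function name `cold_storage_cost` is made up for illustration, and the sketch assumes prices never change and ignores request, retrieval-tier, and minimum-duration fees, so treat the result as a floor rather than a quote.

```bash
#!/usr/bin/env bash
# Prices in cents per TB, copied from the figures quoted in the text.
STORAGE_CENTS_PER_TB_MONTH=360   # $3.60 per TB per month
EGRESS_CENTS_PER_TB=900          # $9.00 per TB downloaded

# Usage: cold_storage_cost <terabytes> <years> <full-downloads>
# Prints the total in dollars. Integer cents avoid floating point in bash.
cold_storage_cost() {
    local tb=$1 years=$2 downloads=$3
    local storage=$(( tb * years * 12 * STORAGE_CENTS_PER_TB_MONTH ))
    local egress=$(( tb * downloads * EGRESS_CENTS_PER_TB ))
    local total=$(( storage + egress ))
    printf '%d.%02d\n' $(( total / 100 )) $(( total % 100 ))
}
```

For example, `cold_storage_cost 1 10 1` prints `441.00`: ten years of storage for 1TB plus one full download. At these numbers the monthly storage fee, not the download, dominates the bill.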

Rants onward..

I own a metal (tin) record from my family that’s about 100 years old. Despite being stored in various unprotected environments, the data (the sound), although crackly and crunchy, is still understandable. Analog for the win. Meanwhile, I have 10-year-old CD-Rs in cases, in boxes, where the coating has worn off. No good.

Should we consider converting our digital data to analog for archival purposes?

Parity via software (aka Parchives, get it?)

Parity files (pars) are essentially the software equivalent of RAID, and they have been useful (to me) for recovering incomplete and damaged files from early failed downloads from Usenet. I don’t see a lot of discussion of them at r/datahoarder, but maybe they have other strategies. Programs that create Parchives, like MultiPar, attempt to create parity files automatically after compression, though I’ve had trouble getting this to work with the latest software versions. Using parity files to safeguard backups, especially for large data volumes, might be worthwhile. Perhaps the approach is to figure out the percent chance of failure over time for a given medium and generate that percentage of parity files. Or more. Then distribute those across several “clouds” for safety. Maybe, in combination with cold storage, there’s a happy medium where parity files can recover x amount of stuff without being a bank drainer when you want to get it back.
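To show the basic idea behind parity recovery, here is a toy sketch using plain XOR parity, the same trick single-parity RAID uses. This is not how PAR2 works internally (it uses Reed-Solomon coding, which can survive several lost blocks), and the helper names `to_hex`, `xor_hex`, and `xor_blocks` are made up for illustration. It assumes bash and equal-sized blocks, and it can rebuild exactly one missing block.

```bash
#!/usr/bin/env bash
# Convert stdin to one continuous lowercase hex string.
to_hex() { od -An -v -tx1 | tr -d ' \n'; }

# XOR two equal-length hex strings, one byte (two hex digits) at a time.
xor_hex() {
    local a=$1 b=$2 out="" i
    for (( i = 0; i < ${#a}; i += 2 )); do
        out+=$(printf '%02x' $(( 0x${a:i:2} ^ 0x${b:i:2} )))
    done
    printf '%s\n' "$out"
}

# XOR any number of equal-length hex blocks together.
xor_blocks() {
    local acc=$1 block
    shift
    for block in "$@"; do
        acc=$(xor_hex "$acc" "$block")
    done
    printf '%s\n' "$acc"
}

# Three data blocks plus one parity block built from all of them.
b1=$(printf 'AAAA' | to_hex)
b2=$(printf 'BBBB' | to_hex)
b3=$(printf 'CCCC' | to_hex)
parity=$(xor_blocks "$b1" "$b2" "$b3")

# Pretend b2 was lost: XOR the survivors with the parity to rebuild it.
recovered=$(xor_blocks "$b1" "$b3" "$parity")
[ "$recovered" = "$b2" ] && echo "block 2 recovered"
```

One parity block here costs a third of the data size and covers one lost block. Tools like par2 let you pick the redundancy percentage, which is where the “percent chance of failure” idea would plug in.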